Facebook rolls out strategies to curb spread of fake news

Facebook announced Thursday its plans to address the spread of fake news, an apparent response to critics who have blamed the site for making it easy to peddle misinformation, or even for swinging the election. The social media network has long been cautious about interfering with what gets shared, not wanting to be, as The New York Times puts it, "an arbiter of truth." But while "we really value giving people a voice … we also believe we need to take responsibility for the spread of fake news on our platform," said Adam Mosseri, a Facebook vice president.

Facebook plans to experiment with gutting fake news websites' revenue by scanning third-party links for pages that are mainly advertising, or are pretending to be another website, like a fake New York Times. Users will also be able to report posts that appear to be fake:

Users can currently report a post they dislike in their feed, but when Facebook asks for a reason, the site presents them with a list of limited or vague options, including the cryptic "I don't think it should be on Facebook." In Facebook's new experiment, users will have a choice to flag the post as fake news and have the option to message the friend who originally shared the piece to let them know the article is false. If an article receives enough flags as fake, it can be directed to a coalition of groups that would perform fact-checking, including Poynter, Snopes, PolitiFact and ABC News, among others. Those groups will check the article and can mark it as a "disputed" piece, a designation that will be seen on Facebook. Disputed articles will ultimately appear lower in the News Feed. If users still decide to share disputed articles, they will receive pop-ups reminding them that the accuracy of the piece is in question. [The New York Times]

Opinion pieces, or satirical ones like those published by The Onion, will not be affected by the changes, Facebook said.

"The fake cat is already out of the imaginary bag," explained Emily Bell, the director at the Tow Center for Digital Journalism at Columbia University. "If [Facebook] didn't try and do something about it, next time around it could have far worse consequences."

Jeva Lange was the executive editor at TheWeek.com. She formerly served as The Week's deputy editor and culture critic. She is also a contributor to Screen Slate, and her writing has appeared in The New York Daily News, The Awl, Vice, and Gothamist, among other publications. Jeva lives in New York City. Follow her on Twitter.

-

What are the best investments for beginners?

What are the best investments for beginners?The Explainer Stocks and ETFs and bonds, oh my

-

What to know before filing your own taxes for the first time

What to know before filing your own taxes for the first timethe explainer Tackle this financial milestone with confidence

-

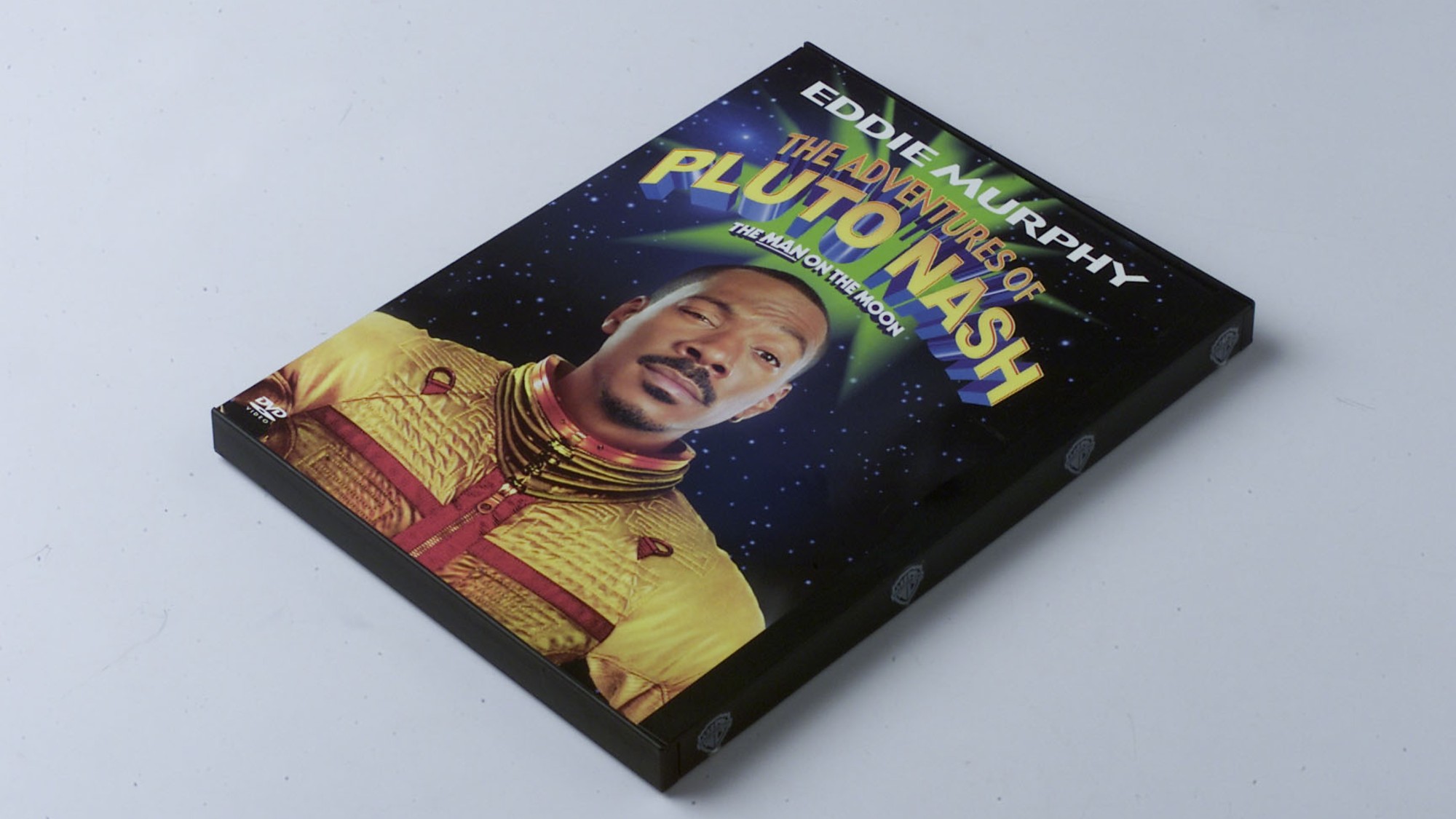

The biggest box office flops of the 21st century

The biggest box office flops of the 21st centuryin depth Unnecessary remakes and turgid, expensive CGI-fests highlight this list of these most notorious box-office losers

-

TikTok secures deal to remain in US

TikTok secures deal to remain in USSpeed Read ByteDance will form a US version of the popular video-sharing platform

-

Unemployment rate ticks up amid fall job losses

Unemployment rate ticks up amid fall job lossesSpeed Read Data released by the Commerce Department indicates ‘one of the weakest American labor markets in years’

-

US mints final penny after 232-year run

US mints final penny after 232-year runSpeed Read Production of the one-cent coin has ended

-

Warner Bros. explores sale amid Paramount bids

Warner Bros. explores sale amid Paramount bidsSpeed Read The media giant, home to HBO and DC Studios, has received interest from multiple buying parties

-

Gold tops $4K per ounce, signaling financial unease

Gold tops $4K per ounce, signaling financial uneaseSpeed Read Investors are worried about President Donald Trump’s trade war

-

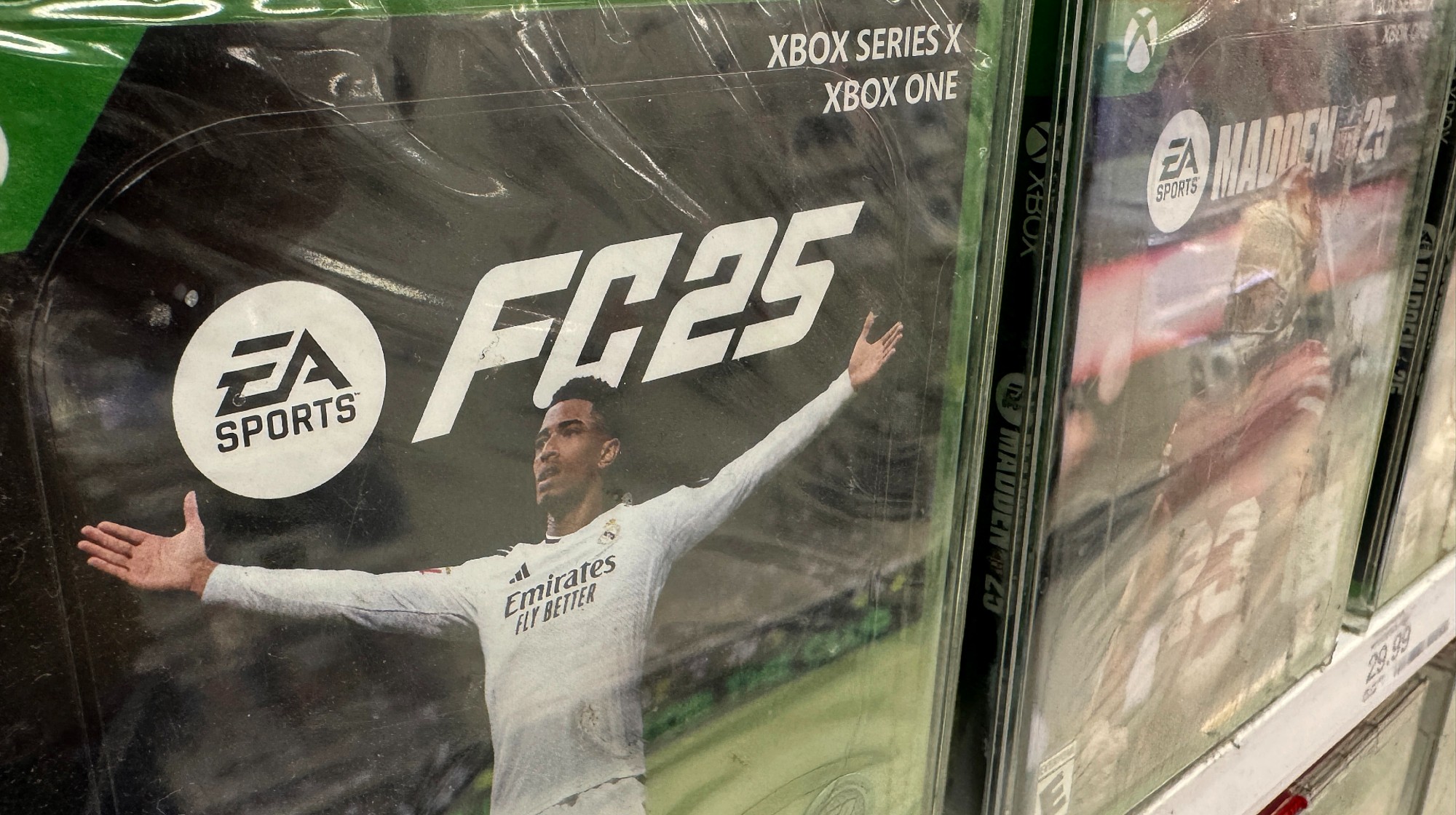

Electronic Arts to go private in record $55B deal

Electronic Arts to go private in record $55B dealspeed read The video game giant is behind ‘The Sims’ and ‘Madden NFL’

-

New York court tosses Trump's $500M fraud fine

New York court tosses Trump's $500M fraud fineSpeed Read A divided appeals court threw out a hefty penalty against President Trump for fraudulently inflating his wealth

-

Trump said to seek government stake in Intel

Trump said to seek government stake in IntelSpeed Read The president and Intel CEO Lip-Bu Tan reportedly discussed the proposal at a recent meeting