Pros and cons of social media content moderation

Where do you draw the line between online safety and freedom of speech?

The Supreme Court recently agreed to take on two cases at the center of an ongoing content moderation debate, where it will decide "whether states can essentially control how social media companies operate," CNN reported. The justices will be considering laws passed in Texas and Florida in 2021 that "could have nationwide repercussions for how social media — and all websites — display user-generated content," the outlet added.

Both sides of the political divide have heavily scrutinized Big Tech and its content removal policies. Democrats have pushed for more moderation of user-generated content, while Republicans claim that social media companies are overstepping and excessively targeting content from the conservative right, an allegation former President Donald Trump has repeated several times.

Pro: It protects the public from harmful content and misinformation

For some, content moderation is the first line of defense against the spread of misinformation and content that could be harmful to users. It can protect young users from cyberbullying or flag posts that spread misinformation, which became a point of contention at the height of Covid-19. "Content moderation is really about human safety," argued Alexandra Popken, VP of Trust and Safety at WebPurify, a content moderation company. The goal of moderation is "to proactively detect and remove harms before they materialize and impact real people," Popken added, "or to respond and react as quickly as possible once they have materialized."

Con: It negatively impacts the mental health of moderators

Those tasked with scouring thousands of potentially jarring posts, images and videos have complained that the work hurts their mental health and that companies don't offer adequate resources to help those who are struggling. In June, hundreds of social media moderators for outsourcer TELUS International in Germany called on lawmakers to improve their work conditions, "citing tough targets and mental health issues," Reuters reported. They were "led to believe the company had appropriate mental health support in place, but it doesn't. It's more like coaching," Cengiz Haksöz, a former content moderator at TELUS International, told the outlet. "And these outsourcers are helping the tech giants get away from their responsibilities."

Pro: It protects a company's brand

Content moderation enables brands to control their reputation, protecting them from inflammatory content that could harm their users or alienate them from advertisers. "Illicit submissions that unalign with a brand’s values can quickly turn products intended to spread positivity into something far more sinister," Jonathan Freger, co-founder and CTO of WebPurify, said in Forbes. A lack of content moderation "allows for harmful UGC to slip through the cracks and threaten the user experience and brand reputation," he added.

Con: It opens the door for 'digital authoritarianism'

Content moderation has snowballed, and the "collateral damage in its path" was ignored, Evelyn Douek said in Wired. And the push for more moderation in the U.S. has had "geopolitical costs, too"; some authoritarian governments "pointed to the rhetoric of liberal democracies in justifying their own censorship." Western governments have "largely left platforms to fend for themselves in the global rise of digital authoritarianism," she noted. "Governments need to walk and chew gum in how they talk about platform regulation and free speech if they want to stand up for the rights of the many users outside their borders."

Pro: It puts the onus on social media companies to keep platforms safe

Users benefit from exercising their own discretion about which content to interact with, but social media platforms are still responsible for fostering a safe environment for everyone. Having content moderation tools and policies in place is part of how companies take responsibility for keeping platforms safe, especially for marginalized people who find solace and community online. Often, those communities can become the targets of content that threatens that safety, "which is where content moderation is crucial," Popken, WebPurify's VP of Trust and Safety, said in an interview with Tech HQ.

Con: It is anti-free speech

One of the prevailing arguments against content moderation is that it is inherently anti-free speech, especially when done at the behest or under the influence of government officials. In June, a U.S. federal court issued an injunction barring the government from contacting social media platforms about moderating posts protected by the First Amendment, Quartz reported. The decision came in response to a lawsuit filed by Republican attorneys general who alleged that the government was censoring free speech on social media platforms under the guise of combatting Covid-related or election misinformation. In his ruling, Judge Terry A. Doughty wrote that the case "arguably involves the most massive attack against free speech in United States’ history." He concluded that the evidence depicted "an almost dystopian scenario," wherein the government assumed a role "similar to an Orwellian 'Ministry of Truth.'"

Theara Coleman has worked as a staff writer at The Week since September 2022. She frequently writes about technology, education, literature and general news. She was previously a contributing writer and assistant editor at Honeysuckle Magazine, where she covered racial politics and cannabis industry news.

-

How the FCC’s ‘equal time’ rule works

How the FCC’s ‘equal time’ rule worksIn the Spotlight The law is at the heart of the Colbert-CBS conflict

-

What is the endgame in the DHS shutdown?

What is the endgame in the DHS shutdown?Today’s Big Question Democrats want to rein in ICE’s immigration crackdown

-

‘Poor time management isn’t just an inconvenience’

‘Poor time management isn’t just an inconvenience’Instant Opinion Opinion, comment and editorials of the day

-

Moltbook: The AI-only social network

Moltbook: The AI-only social networkFeature Bots interact on Moltbook like humans use Reddit

-

Are Big Tech firms the new tobacco companies?

Are Big Tech firms the new tobacco companies?Today’s Big Question A trial will determine whether Meta and YouTube designed addictive products

-

Is social media over?

Is social media over?Today’s Big Question We may look back on 2025 as the moment social media jumped the shark

-

Australia’s teen social media ban takes effect

Australia’s teen social media ban takes effectSpeed Read Kids under age 16 are now barred from platforms including YouTube, TikTok, Instagram, Facebook, Snapchat and Reddit

-

Trump allies reportedly poised to buy TikTok

Trump allies reportedly poised to buy TikTokSpeed Read Under the deal, U.S. companies would own about 80% of the company

-

What an all-bot social network tells us about social media

What an all-bot social network tells us about social mediaUnder The Radar The experiment's findings 'didn't speak well of us'

-

Broken brains: The social price of digital life

Broken brains: The social price of digital lifeFeature A new study shows that smartphones and streaming services may be fueling a sharp decline in responsibility and reliability in adults

-

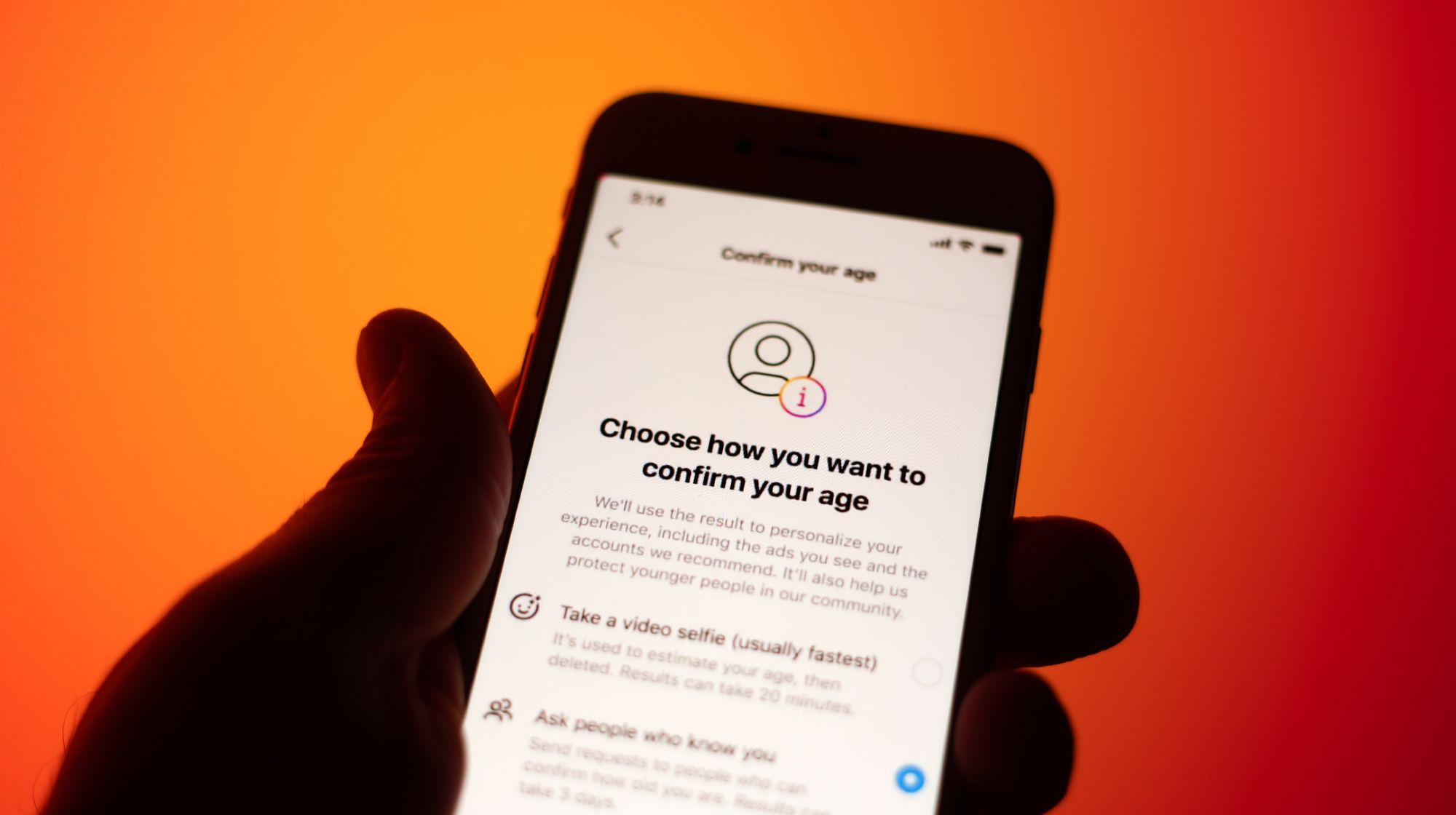

Supreme Court allows social media age check law

Supreme Court allows social media age check lawSpeed Read The court refused to intervene in a decision that affirmed a Mississippi law requiring social media users to verify their ages